How We Use A/B Testing to Grow Our Business

Making a change to your website usually goes like this:

We need to make this page better, let’s make X changes.

Then you do one of two things.

1. Get a designer to mock up changes, argue about them internally, then come to a decision and implement it.

2. Make the changes without any discussion or testing.

Everyone says “wow that looks great, so much better!” Go to the next page and repeat.

After you spent all that time making changes, did your sales go up? Click-through rates? Email opt-ins?

Did any of those metrics actually get worse?

It’s hard to say unless you test. You might be making changes that look better to you, but are actually hurting your business.

A/B testing helps you avoid this scenario by giving you concrete data on how your changes perform.

We have successfully used A/B testing to increase traffic down our funnel, resulting in more sales. We’ve also had a lot of unsuccessful or inconclusive tests, and had conversations about whether A/B testing is helpful at all.

Here are some successful tests we’ve done, with a couple important caveats.

How We A/B Test

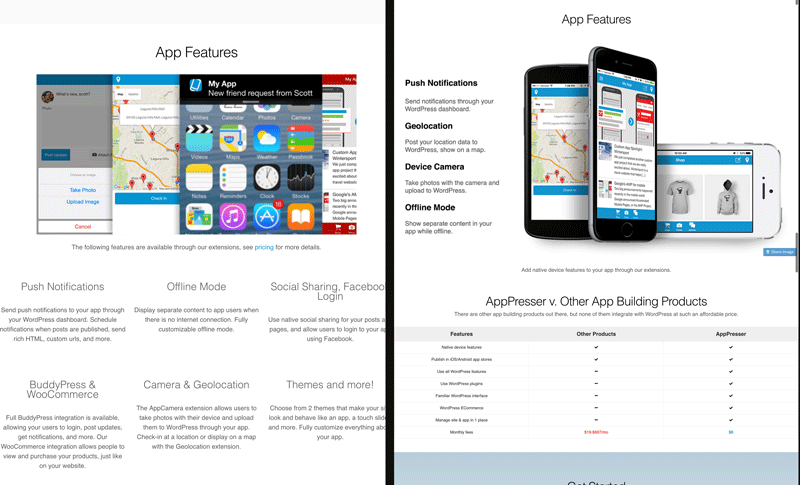

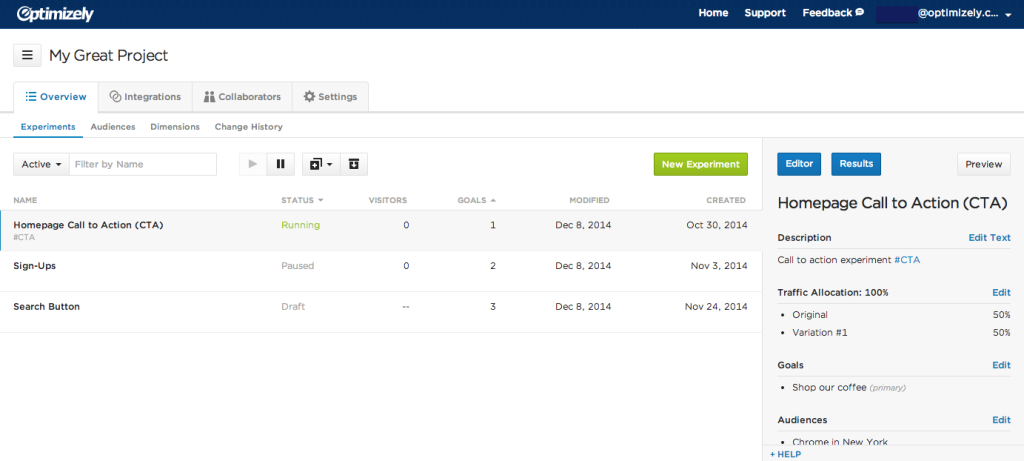

We use Optimizely to set up our tests. It lets you set up tests quickly, view statistics, and run different types of tests.

There are other great tools out there, such as Visual Website Optimizer. What tool you use is not as important as your testing process. It’s important to think before you test, because if you just test blindly, you’ll end up with very little to show for it.

Here’s how we go about A/B testing.

Get Your Story Straight

Before we started testing, we figured out our company story. We had to make sure our messaging was clear, because no amount of design tweaks will help much if you aren’t communicating well to your customers.

We plotted out how we could clearly communicate our message on our website, and then set out to ensure each page was doing a good job of it.

Figure Out Your Funnel

Next we looked at our funnel, which goes something like this: Homepage => Features => Pricing => Checkout => Purchase Confirmation. Of course there are tangents in that funnel; hardly anyone goes through in exactly that order. The important thing is to see where people are dropping off, try to stop the leaks, and get more people to purchase.
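To make "see where people are dropping off" concrete, here is a minimal sketch that computes the step-to-step conversion rate for a funnel shaped like ours and picks out the biggest leak. The visitor counts are hypothetical placeholders, not our real numbers; you would swap in counts from your own analytics tool.

```python
# Hypothetical visitor counts per funnel step (placeholders, not real data).
funnel = [
    ("Homepage", 10000),
    ("Features", 4000),
    ("Pricing", 1500),
    ("Checkout", 300),
    ("Purchase Confirmation", 120),
]


def step_conversions(steps):
    """Return (from_step, to_step, rate) for each adjacent pair of steps."""
    rates = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        rate = count_b / count_a if count_a else 0.0
        rates.append((name_a, name_b, rate))
    return rates


def biggest_leak(steps):
    """Return the transition with the lowest conversion rate."""
    return min(step_conversions(steps), key=lambda r: r[2])


for a, b, rate in step_conversions(funnel):
    print(f"{a} -> {b}: {rate:.1%} continue, {1 - rate:.1%} drop off")

leak_from, leak_to, leak_rate = biggest_leak(funnel)
print(f"Biggest leak: {leak_from} -> {leak_to} ({leak_rate:.1%} continue)")
```

With these made-up counts, the weakest transition is Pricing to Checkout. The lowest rate is not automatically the best place to test first, because steps higher in the funnel see more traffic.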

Tip: You will want to track conversions through your funnel. We have funnel tracking in Woopra; you can also set up a conversion funnel in Google Analytics.

Move People Down the Funnel

The idea is to move people from the top of the funnel to the bottom. In our case, that means from the homepage to purchase confirmation.

We started at the top of the funnel, because that is where the most people enter. Starting with the homepage, how can we clear up our messaging, and get more people down the funnel?

Looking at the homepage, we found parts that were confusing or misleading, and planned out some changes. Once we knew what we wanted to do, we set up a test in Optimizely.

The great thing about Optimizely is that you can make changes using their visual editor, without actually building out a new page in WordPress. This allows you to test changes quickly, and not spend development time unless you see results.

Some of Our Tests

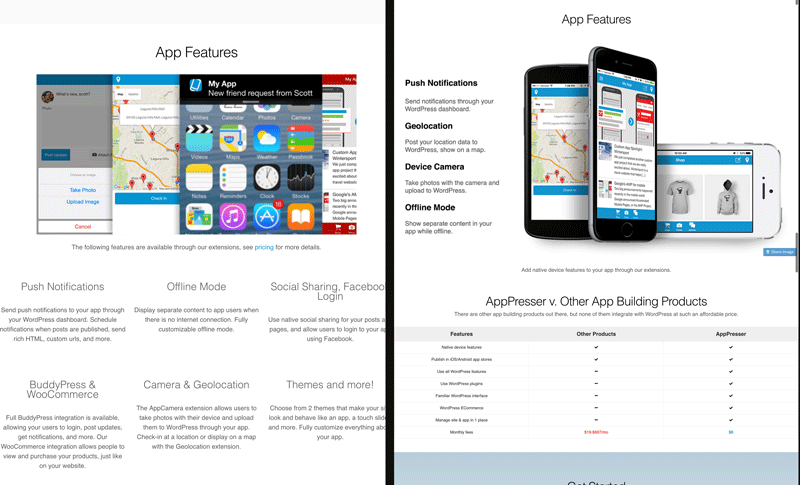

Here are the original and variation home pages that we tested. (The original is from the Internet Archive, so it’s a little tweaked.)

- Variation

- Original

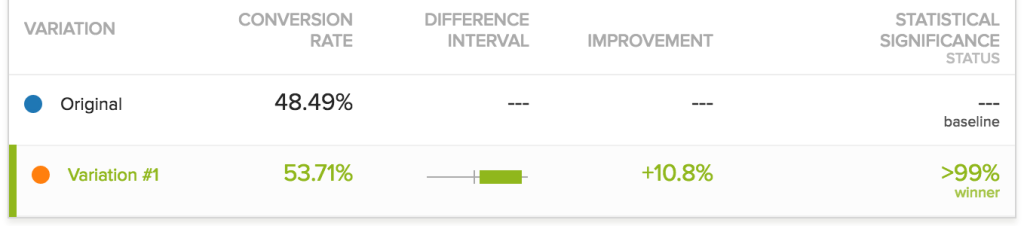

The changes we made to our homepage resulted in a 10% increase in visitors clicking through to the pricing page.

This may not seem like a lot, but if we make 10% increases in each part of the funnel, we are talking about thousands of people each month.
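The arithmetic behind that claim is compounding: relative lifts at successive steps multiply rather than add. The sketch below assumes a 10% lift at each of the four transitions in our funnel and, importantly, that a lift at one step does not lower the conversion rate of the steps after it. That assumption will not always hold.

```python
def compounded_lift(step_lifts):
    """Combine relative lifts at successive funnel steps into one overall lift.

    Assumes each step's lift is independent of the others.
    """
    total = 1.0
    for lift in step_lifts:
        total *= 1.0 + lift
    return total - 1.0


# Four transitions: Homepage -> Features -> Pricing -> Checkout -> Purchase.
overall = compounded_lift([0.10] * 4)
print(f"Overall lift in purchases: {overall:.1%}")  # about 46%
```

Four 10% wins in a row would mean roughly 46% more people reaching the end of the funnel, which is why small step-level gains are worth chasing.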

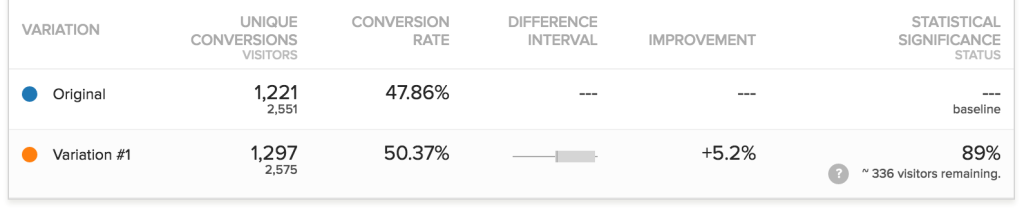

Another test we ran on our features page saw about a 5% increase in clicks from the features page to pricing.

Again, not a huge win, but 5% is something.

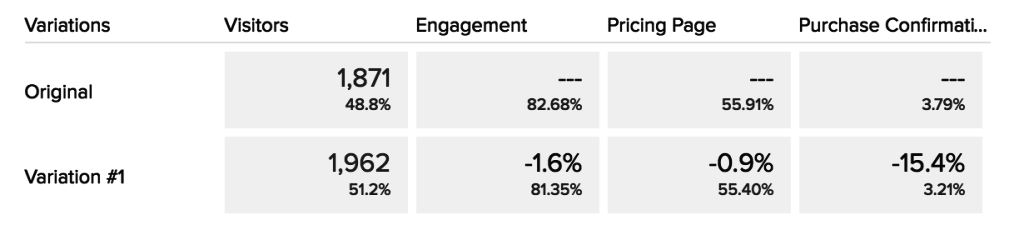

Other tests we have run show no improvement, or no statistical significance. For example, we have a test running right now on our features page that has been going for over a month, with negative results.

We didn’t make any big changes: we added a “view pricing” button and changed the display of the app features. I think we haven’t seen good results because the changes were too small. Focusing on big changes is what gets you the real results.
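For a rough idea of what "no statistical significance" means, here is a classical two-proportion z-test in plain Python. This is a textbook check, not Optimizely's own statistics, which we rely on in practice, and the visitor and conversion counts in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a / n_a: conversions and visitors for the original.
    conv_b / n_b: conversions and visitors for the variation.
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: 10.0% vs 11.0% conversion with 10,000 visitors per variation.
z, p = two_proportion_z_test(1000, 10000, 1100, 10000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be noise. With small changes and modest traffic, the p-value tends to stay high for a long time, which matches what we saw on the features page.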

Right now we are running a test on our pricing page that I am very optimistic about, but it will take a while for us to reach statistical significance.

Keep in mind that as you go further down your funnel, traffic decreases, so it takes much longer to get stats. If you run a test on your checkout page, and you only have a few sales a day, it may take a few months to get a final result.
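To estimate how long a test might take, you can work backwards from a standard sample-size approximation for comparing two conversion rates. The sketch below assumes a 5% significance level and 80% power, which are common defaults and not settings taken from our tests. The baseline rate and traffic figures are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect a relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(baseline_rate, relative_lift, daily_visitors):
    """Days needed when daily traffic is split evenly across two variations."""
    needed = 2 * visitors_per_variation(baseline_rate, relative_lift)
    return ceil(needed / daily_visitors)


# Hypothetical: 10% baseline conversion, hoping to detect a 10% relative lift.
print(visitors_per_variation(0.10, 0.10), "visitors per variation")
print(days_to_finish(0.10, 0.10, daily_visitors=5000), "days at 5,000 visitors/day")
print(days_to_finish(0.10, 0.10, daily_visitors=100), "days at 100 visitors/day")
```

The required sample grows quickly as the lift you want to detect shrinks, so low-traffic pages near the bottom of the funnel are better suited to bold changes than to small tweaks.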

Tips

Here are a few tips we’ve picked up from our testing:

- Get the big picture figured out. If you don’t have your messaging down, no amount of testing will show big gains.

- Implement tests quickly with Optimizely’s visual editor to save time. Do as little development as possible before getting a test up and running.

- Test big changes, not button colors. Try different messaging, new headlines, be bold.

- Always have a test running.

- Keep a larger perspective when designing tests. More on that below.

When A/B Testing Is Not the Answer

A/B testing is a double-edged sword.

On one hand, being a data-driven company is what we should all be striving for. A/B testing gives you hard data, which helps you make confident decisions. We’ve seen some decent wins in our own testing with very positive results.

On the other hand, data without context can cause you to make poor decisions. For example, let’s say I run an A/B test where I show 50% of my visitors a coupon, and test for purchase conversions. Obviously the customers that received the coupon will convert higher, but does that really help me in the long run?

That’s a really obvious example, but there are much more subtle ways in which A/B testing can be deceptive. Let’s say you make a change to hard-sell your customers using slimy marketing tricks, which results in a higher conversion rate. That may win an A/B test, but damage your brand in the long run.

When zooming in on details like “does a blue or a red purchase button convert better?”, it’s important to keep perspective on your larger business goals.

When NOT to Test

There may be times where you are so certain of where you want your company to go, that you don’t need to test.

Tony Perez recently wrote an article on his pricing journey at Sucuri Security. He made one of the biggest changes to his pricing without any testing, and it definitely would have lost an A/B test. However, it resulted in a positive long term change for his business.

> We made our biggest pricing increase to the core product in our 6 year history on February 18th, 2015…For about three weeks after the change we lost close to 40% of our signups, we spent a lot of time changing our underpants.
>
> It was perhaps the scariest moment in our history, in which both Daniel and I barely slept and repeatedly tested the signup process to makes sure it was functional. Within 48 hours of the release we knew we had a problem, but we also knew that changing too quickly would be ill-advised; we would have to reach deep and see if the market normalized.
>
> The market normalized, and within a month that 40% reduction, turned to a 10 – 15% drop, which when you compare to the price change was more than acceptable.

I know for a fact that Tony believes in A/B testing, but there are times when you have to go with your gut. Sometimes that works out, sometimes it doesn’t.

There are lots of people who would tell you A/B testing is a waste of time. Does that mean you shouldn’t A/B test?

Nah. Don’t listen to them.

Usually people who say that just mean that you shouldn’t lose sight of your larger business goals while examining what button color gets the most clicks.

Keep testing. Just make sure that you don’t lose the forest for the trees.

Hey Scott

Really good post. I’m curious…

What was the net conversion change on purchase? What was your goal in Optimizely? Were you looking for any click, or did you focus on only your buy buttons, or does it account for your contact as well?

In other words, you saw 10% on the Pricing page, then another 5% from the features to the Pricing page. That’s all great, but what did that translate to from Pricing to Purchase? Was it simply that people clicked for the sake of clicking, out of curiosity? If there was no change in purchases, was it in fact valuable? Or are you measuring for the sake of measuring?

I love this part though:

Also, how long did you run the tests and at what confidence level? Did you have a representative sample?

Think this info would be really insightful.

Sheesh Tony, you just had to give me the 3rd degree 😉

Our main goal was to reduce the number of people leaving, and get more of them to continue down our funnel. We tracked general engagement and clicks to the pricing page for those particular tests. In other tests we focus on actual purchases, and we also have data in Woopra and Google Analytics to view our funnel conversion rate. We rely on Optimizely’s statistical significance algorithms.

It’s hard to relate everything you test directly to purchases, there are too many factors involved to get clear data for some tests. That’s why it’s so important to have the bigger picture in mind, like we talked about.

That’s why I quoted you, because I agree that A/B testing is not always the answer.

Hey @scott

HAAH.. sorry not trying to give a 3rd degree.. honestly trying to understand the information you’re sharing.

You’re absolutely right, reading this I was not sure what the goal, or intent, of the test was, so it’s hard to tell good from bad in the tests. If your intent was to get people through to the next phase, that’s great; the problem is that on the page there seem to be multiple funnels.

i.e., you have:

– Cart

– Account

– Pricing

– Contact

That’s only four, not including all the other navigation and footer links. I say this because I’ve seen this before. So you say they go down the funnel to the next phase. Perfect. Which funnel exactly? And was that funnel the one you wanted them to go down?

Also, very familiar with Optimizely and their statistical significance algorithms. So what was your confidence level on these? For instance, if you say it was 60%, that’s essentially a coin toss.. 6 out of 10 times your test will be accurate.. : / I think that’s my point in most of these measurement tests, without that kind of context data, it’s hard to decipher.. so is this good or bad?

🙂

Well, with that homepage experiment it was 99% significance. Optimizely usually tells you when you can end the test.

Funnels are not always linear, people take different paths but the goal is to go from landing page => Pricing => Checkout => Purchase. It doesn’t matter if they go Home => features => home => contact => pricing => blog => pricing => checkout => purchase. The funnel is still the same.

Of course you can have multiple funnels, but the purchase one is most important.